Instructions for test algorithms

One of the main goals of the REVERB challenge is to inspire and evaluate diverse ideas for speech enhancement and robust automatic speech recognition in reverberant environments. Therefore, we limit the instructions to those that are necessary to ensure that the tasks reproduce realistic conditions to the extent feasible. Furthermore, the following instructions are meant to provide a common understanding for all participants in both the speech enhancement and the automatic speech recognition tasks.

The following instructions summarize what information may be exploited and what information may not.

Information that is allowed to be exploited

- No room changes within a single test condition (room type and near/far position in the room). It can be assumed that the speakers stay in the same room for each test condition.

- Full batch processing/utterance-based batch processing/real-time processing: Any one of full batch processing, utterance-based batch processing, or real-time processing can be used.

- In case of full batch processing, it is possible to use all utterances from a single test condition (room type and near/far position in the room) to improve the performance. This allows, e.g., multiple passes over the data of a single test condition before the final results are produced. All utterances of that condition can also be exploited to estimate the room parameters.

- In case of utterance-based batch processing, all the processing has to be performed separately for each utterance.

- In case of real-time processing, each frame should be processed using only information from past frames and from future frames up to a fixed processing delay. This delay is chosen by the participants and should be clearly stated. Moreover, the processing has to be performed separately for each utterance.

- Training data: It is possible to use the clean training data, all training data from the other rooms provided by the REVERB challenge, and, if you want to extend the training set, also training data from any other rooms to obtain multi-condition acoustic models for speech recognition. However, we encourage participants to also provide results obtained with the training data (clean and multi-condition) provided by the challenge in the ASR task.

- When using the multi-channel recordings, the array geometry (distance between 2 microphones and radius of the circular array) will be provided and may be used for multi-channel processing. The evaluation scores should be submitted along with the number of microphones used.
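The three processing categories above differ mainly in how much data each output may depend on. The following is a minimal sketch of that difference, not part of the challenge tools. Mean subtraction stands in for any real enhancement or adaptation step, and the names `full_batch`, `utterance_batch`, `real_time`, and `lookahead`, as well as the `(condition, utterance id, frames)` tuple layout, are assumptions made for illustration.

```python
from collections import defaultdict


def group_by_condition(utterances):
    """Group (condition, utt_id, frames) tuples by test condition."""
    groups = defaultdict(list)
    for condition, utt_id, frames in utterances:
        groups[condition].append((utt_id, frames))
    return dict(groups)


def full_batch(utterances):
    """Statistics are pooled over all utterances of ONE test condition only."""
    out = {}
    for _, items in group_by_condition(utterances).items():
        pooled = [x for _, frames in items for x in frames]
        mean = sum(pooled) / len(pooled)
        for utt_id, frames in items:
            out[utt_id] = [x - mean for x in frames]
    return out


def utterance_batch(utterances):
    """Each utterance is processed using only its own frames."""
    out = {}
    for _, utt_id, frames in utterances:
        mean = sum(frames) / len(frames)
        out[utt_id] = [x - mean for x in frames]
    return out


def real_time(utterances, lookahead):
    """Frame t sees frames 0..t+lookahead of the same utterance, nothing later."""
    out = {}
    for _, utt_id, frames in utterances:
        processed = []
        for t in range(len(frames)):
            visible = frames[: t + lookahead + 1]  # past frames plus fixed delay
            processed.append(frames[t] - sum(visible) / len(visible))
        out[utt_id] = processed
    return out
```

In this sketch, `lookahead` plays the role of the fixed processing delay (in frames) that real-time submissions are asked to report. Note that `full_batch` never mixes frames from different test conditions.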

Information that is not allowed to be exploited

- Speaker identity: We assume that the speaker identity is unknown during testing. This means that speaker identity may not be used at test time; for example, supervised speaker adaptation is not allowed. However, it is allowed to blindly estimate speaker identity. For example, in the full batch processing category, it is allowed to estimate speaker adaptation parameters in a single condition using multiple test utterances that are blindly assigned to the same speaker. Note that, for training, it is allowed to use speaker identity.

- When processing a given test condition, it is not allowed to use test data from other test conditions to extract room parameters, to perform adaptation, or to help improve performance in any way. For example, it is not allowed to use models adapted on a given condition of the development set for processing of the evaluation sets.

- Room parameters: The parameters of the room are assumed to be unknown. These parameters include the reverberation time, parameters that describe the relation between direct sound and reverberation (e.g. direct-to-reverberation ratio, D50, C50), and the room impulse response(s). If your approach requires one or several of these parameters, they have to be estimated from the test data using unsupervised approaches. This restriction is introduced to make the challenge as realistic as possible. Note that for many applications, the ground truth about the room parameters is not available to the system.

- Speaker position: We assume that the speaker can move from one position to another within a single test condition. That means that the speaker position may be different for different utterances within one test condition. However, the position of the speaker is fixed within each utterance, and this can be assumed for system development.

- The clean test data (in case of RealData this corresponds to the close-talking recordings) for both the development and evaluation sets cannot be used at all, except for the calculations of the enhancement evaluation metrics (CD, LLR, FWSegSNR and PESQ).

- The transcriptions of both the development and evaluation sets can only be used to calculate WER. For example, it is not allowed to perform supervised adaptation based on the transcriptions or to perform forced HMM state alignment.

Instructions for result submission

Instructions for Enhancement task

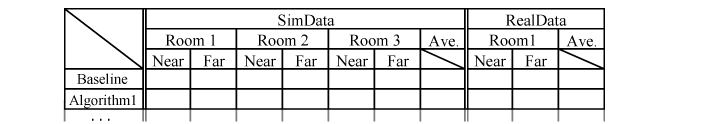

- Participants in the SE task will be required to process both SimData and RealData, evaluate the performance in terms of objective measures that can be calculated with the tools provided by the challenge organizers, and fill in the table below for each evaluation metric.

- For SimData, please evaluate the performance in terms of CD, LLR, frequency-weighted segmental SNR (FWSegSNR), and SRMR, and prepare a table for each criterion. Use of optional measures (WER and PESQ) is also encouraged.

- For RealData, please evaluate the performance in terms of SRMR and prepare a table for it. The results of the subjective evaluation test will serve as another evaluation criterion for RealData. Use of WER is also encouraged.

- Participants should describe in the paper the computational complexity or latency of the evaluated algorithms, which is also an essential evaluation criterion for attesting to the practicality of the method.

- For SimData, the first channel should always be considered the reference channel. The first channel is time-aligned with the corresponding clean speech, and the SE evaluation tools provided by the challenge rely on this assumption. Participants who evaluate their SE algorithms should therefore generate output speech that is time-aligned with the first channel.
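Because the evaluation tools assume alignment with the first channel, it can be useful to check whether an algorithm has introduced a delay before scoring. The sketch below is one possible check, assuming the signals are available as plain lists of samples. It estimates the lag by brute-force cross-correlation over a small search range and then shifts the output back. The function names and the `max_lag` parameter are hypothetical and not part of the challenge tools.

```python
def estimate_lag(reference, processed, max_lag):
    """Return the lag in [-max_lag, max_lag] that maximizes cross-correlation.

    A positive lag means `processed` is delayed relative to `reference`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, ref_sample in enumerate(reference):
            m = n + lag
            if 0 <= m < len(processed):
                score += ref_sample * processed[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def align_to_reference(processed, lag):
    """Shift `processed` by `lag` samples, zero-padding to keep its length."""
    n = len(processed)
    if lag > 0:  # output is late: drop the leading samples
        return list(processed[lag:]) + [0.0] * lag
    if lag < 0:  # output is early: prepend zeros
        return [0.0] * (-lag) + list(processed[: n + lag])
    return list(processed)
```

A typical use would be `lag = estimate_lag(channel_1, enhanced, max_lag)` followed by `enhanced = align_to_reference(enhanced, lag)`. The brute-force search costs on the order of the signal length times `max_lag`, so an FFT-based correlation would be preferable for long recordings.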

Instructions for ASR task

- Participants in the ASR task will be required to process both SimData and RealData, evaluate the performance in terms of word error rate, and fill in the table below.

- The participants should state whether they use full batch processing, utterance-based batch processing, or real-time processing.

- Although multi-condition training data will be provided, participants are strongly encouraged to also report results obtained with clean training data only, if their algorithms are able to work with it.

- It is also strongly recommended to describe the computational complexity or latency of the evaluated algorithms, which can attest their suitability for real-time processing.

Fig: An example of a result table
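For reference, word error rate is conventionally defined as the number of word substitutions, deletions, and insertions divided by the number of reference words. The sketch below illustrates that definition with a standard edit-distance computation, assuming whitespace-separated transcriptions. It is not the challenge's scoring tool, and official results should be computed with the tools provided by the organizers. The names `word_errors` and `wer` are illustrative.

```python
def word_errors(reference, hypothesis):
    """Return (edit distance in words, number of reference words)."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] = edit distance between the processed reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1], len(ref)


def wer(pairs):
    """WER in percent, pooled over (reference, hypothesis) pairs."""
    errors = 0
    words = 0
    for reference, hypothesis in pairs:
        e, n = word_errors(reference, hypothesis)
        errors += e
        words += n
    return 100.0 * errors / words
```

Errors are pooled over all utterances before dividing, so long utterances weigh more than short ones. Averaging per-utterance rates instead would give a different number.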